K-12 Computer Science Teachers’ Problems of Practice

Recently, K-12 teachers in Indiana spoke to us during #CSTAPDWeekIN (CSTA PD Week in Indiana) about problems of practice that they have experienced or witnessed during their time teaching CS. We highlight here a few of their thoughts.

---

K-12 CS teachers from Indiana recently shared problems of practice with us that they have witnessed or experienced related to computer science education. Three of these addressed the lack of trained teachers and how that impacts schools and students, misconceptions about who belongs in CS, and the lack of funding for CS education despite state-wide mandates.

Lack of trained teachers

Danielle Carr, CS teacher at Lake Central High School and CS instructor for IndianaComputes!, said, “I wish they (researchers) knew that many of the teachers teaching CS don’t have a background in CS.” Echoing this, Jennifer Hanneken, school library media specialist at Lawrenceburg Community School Corporation, noted, “Schools need to be required to have computer science taught by a qualified, certified teacher. They need to understand the enormity of the standards.” This is especially true if we are to achieve equitable learning outcomes so that all students receive quality computer science instruction. Of the 35 teachers we spoke with, nearly one-third indicated that they were second-career teachers who came from industry and were asked to teach CS-specific courses. Nearly half of the teachers were asked or voluntold to teach CS in their schools with little to no background in CS-specific content. This phenomenon is not unique to Indiana; it is a known issue elsewhere in the country and around the world. It underscores how important it is to account for teachers’ CS backgrounds in our research, particularly as they relate to student outcomes.

Misconceptions about who belongs

Teachers were also aware of how critical student and teacher perceptions are about “who” belongs in CS. Carr further said, “There is a stigma of who ‘should/can’ do computer science,” which inevitably impacts recruitment to courses. Carrie Koontz, 6th grade science teacher at Edgewood Junior High School, echoed this: “Students consider CS as a subject for certain people, not for everyone.” While national statistics (such as those provided by Code.org) show an increase in the number of students from historically excluded populations studying CS, there is still a long way to go. Equitable CS education remains a critical goal that must be achieved both in Indiana and nationwide if we want to broaden participation. Overcoming the notion of who “belongs” is an important first step.

Funding for all aspects of CS education

One of the teachers, Kathryn Dunphy, a K-5 teacher at Avon Community School Corporation, said that “there is an assumption of what Computer Science is, so funding is not provided for what is needed, outside of maybe computers.” Several other teachers said that funding for CS education programs, manipulatives for younger students, and related resources is often pushed to the bottom of budgets each school year. As policies move forward in state after state and district after district, policymakers and legislatures must fund their mandates adequately to ensure the best learning outcomes for students.

Dr. Satabdi Basu is a Senior CS Education Researcher at SRI International. She has published numerous articles on CS education research, particularly focused on computational thinking and K-12 students. She has presented at national and international conferences and has also been invited as a keynote speaker.

David Weintrop is an Assistant Professor in the Department of Teaching & Learning, Policy & Leadership in the College of Education with a joint appointment in the College of Information Studies at the University of Maryland. His research focuses on the design, implementation, and evaluation of accessible, engaging, and equitable computational learning experiences. His work lies at the intersection of design, computational science education, and the learning sciences. David has a Ph.D. in the Learning Sciences from Northwestern University and a B.S. in Computer Science from the University of Michigan.

Bishakha Upadhyaya is a Senior at

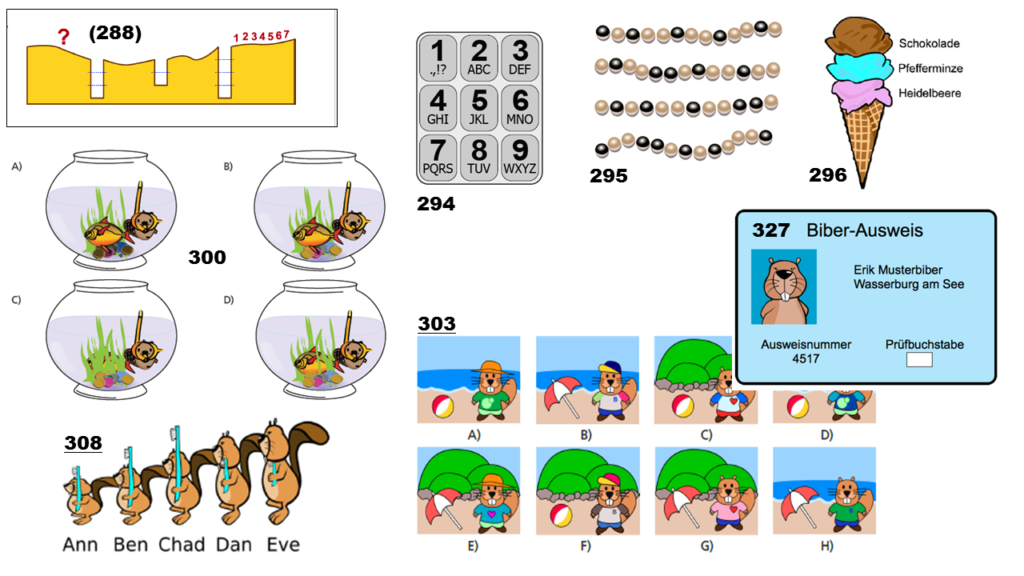

Peter Hubwieser, Technical University of Munich (Germany), taught math, physics and computer science at high schools until 2001. In 1995 he completed his doctoral studies in physics. In 2000 he acquired his postdoctoral teaching qualification (habilitation). In 2002 he was appointed to a professorship position at TUM. He has worked as visiting professor in Austria (Klagenfurt, Salzburg and Innsbruck), France (ENS in Paris and Rennes) and Michigan (MSU). His research activities focus on the empirical investigation of learning processes in computer science. His novel didactical approach triggered the introduction of computer science as a compulsory subject at Bavarian Gymnasiums in 2004.

Miranda Parker is a Postdoctoral Scholar at the University of California, Irvine, working with Mark Warschauer. Her research is in computer science education, where she is interested in topics of assessment, achievement, and access. Dr. Parker received her B.S. in Computer Science from Harvey Mudd College and her Ph.D. in Human-Centered Computing from the Georgia Institute of Technology, advised by Mark Guzdial. She has previously interned with Code.org and worked on the development of the K-12 CS Framework. Miranda was a National Science Foundation Graduate Research Fellow and a Georgia Tech President’s Fellow. You can reach Miranda at