Constructivism and Sociocultural Theory

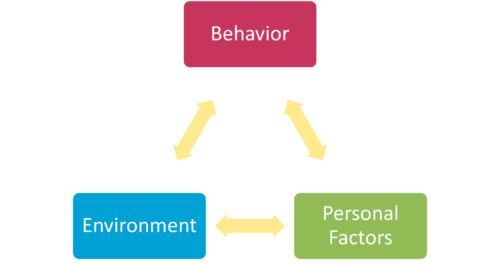

Behaviorism highlighted the influence of the environment, information processing theory essentially ignored it, and social-cognitive theory tried to strike a balance between the two by acknowledging its potential influence. Constructivist (also known as sociocultural) theorists take the role of the environment a step further.

According to constructivist theories (which can focus more on either individual or societal construction of knowledge; Phillips, 1995), knowledge and learning are inherently dependent on the cultural context (i.e., environment) to which one belongs. That may sound like repackaged behaviorism, but “the environment” to a constructivist goes far beyond stimuli, rewards, and punishments.

In constructivist theories of learning, “the environment” includes our family dynamics, our friends, the broad cultures and specific subcultures of the groups with which we associate, and numerous other factors, all of which influence our learning. Although not all constructivist theories agree on a single definition of learning, for our purposes a basic definition suffices: learning is development through internalization of the content and tools of thinking within a cultural context.

Constructivist theories posit that one’s culture provides the tools of thinking, which in turn influence how we learn—or “construct” knowledge. Perhaps the best-known constructivist theorist is Lev S. Vygotsky. Vygotsky authored many papers and two books, which were eventually published together posthumously as a single book titled Mind in Society.

In this collection of Vygotsky’s work, concepts such as internalization and the zone of proximal development (ZPD) are introduced (Vygotsky, 1978). Briefly, constructivist learning theories posit that something is learned when a person internalizes its meaning—internalization is an independent developmental achievement.

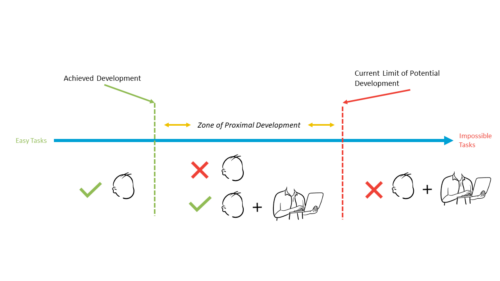

Further, the ZPD encompasses tasks that a learner cannot yet complete independently but can complete with help (see the accompanying figure). Constructivist theorists target the ZPD for optimal learning; when this is done successfully, learners construct their own meaning of the new information, thereby internalizing it.

Vygotsky asserted that thoughts are words. That is, thoughts are inextricably tied to the language we use. In his view, one cannot “think” a thought without first having a word for it. For example, a person cannot think about a sandwich without first having internalized the meaning of the word sandwich. In this way, Vygotsky (and by extension many constructivist theorists) views words as the “tools” for cognition and thus for higher-order thinking.

Strengths

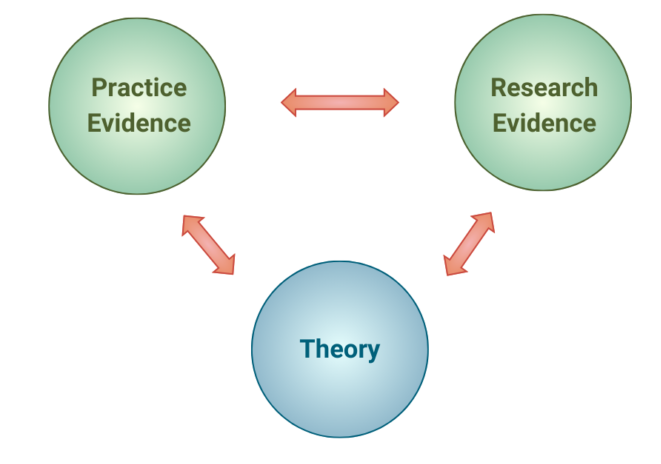

- Constructivist theories are more attentive to learners’ past experiences and cultural contexts than the other major learning theories discussed in this blog series. Because of that, they can provide solid theoretical footing to many research projects focused on addressing disparities.

- The zone of proximal development, internalization, and consideration of words as tools of thought are compelling concepts introduced by constructivist theories.

- The focus on culture and words as tools of thought in constructivist theories can help explain the variety of cognition patterns observed across cultures (e.g., different arithmetic strategies across cultures).

Limitations

- Although constructivist theories prove strong in many respects, they are less applicable to some forms of learning. For example, constructivists are almost exclusively concerned with higher-order learning, and they largely cannot account for learning exhibited by animals (as behaviorist theories can).

- Constructivist theories of learning lie on a scale, from more radical to more conservative, regarding the influence of a person’s individual history. While this is not necessarily a bad thing, it does muddy the waters when one refers simply to “constructivism,” which in fact encompasses a very wide swath of perspectives on learning.

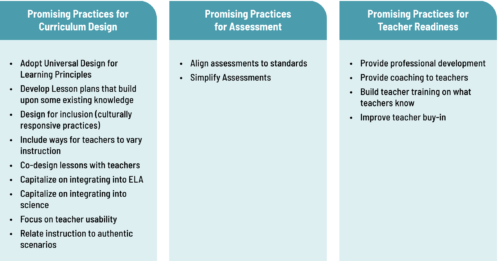

Potential Use Cases in Computing Education

- Research: A researcher may attempt to identify first-year computer science students’ individual zones of proximal development related to coding, and see if teaching coding within this zone enhances students’ motivation, interest, and intention to pursue the content further compared to teaching content outside the zone.

- Practice: Teachers need to ensure all students have (accurately) internalized the meanings of key vocabulary terms/concepts related to their content (e.g., what “objects” or “classes” really are) before they can rely on students to properly use the concept in higher-order, complex problem solving.

Influential theorists:

- Lev Semyonovich Vygotsky (1896 – 1934)

- John Dewey (1859 – 1952)

- Jean Piaget (1896 – 1980)

Recommended seminal works:

Cobb, P. (1994). Where Is the Mind? Constructivist and Sociocultural Perspectives on Mathematical Development. Educational Researcher, 23(7), 13–20. https://doi.org/10.3102/0013189X023007013

Phillips, D. C. (1995). The Good, the Bad, and the Ugly: The Many Faces of Constructivism. Educational Researcher, 24(7), 5–12. https://doi.org/10.3102/0013189X024007005

Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Harvard University Press. https://books.google.com/books?id=Irq913lEZ1QC

References

Phillips, D. C. (1995). The Good, the Bad, and the Ugly: The Many Faces of Constructivism. Educational Researcher, 24(7), 5–12. https://doi.org/10.3102/0013189X024007005

Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Harvard University Press. https://books.google.com/books?id=Irq913lEZ1QC