Archive: 2020

Block-based Programming in Computer Science Classrooms

This week’s post features David Weintrop and his research on block-based programming. He shares three key findings from his research so far.

The first time I saw Scratch, I thought, “Wow! How clever! Is this the end of missing-semicolon errors!?” It was clear to me how the shape of the blocks, their easily understood behaviors, and the Sprites they controlled all worked together to make programming more accessible and inviting.

With my background in computer science, I could also see that foundational programming concepts were present. I started with Scratch but then discovered a whole host of other environments, like Snap!, MIT AppInventor, Pencil Code, and Alice, that used a similar block-based approach. This got me thinking: Do kids learn computer science with block-based tools? Should these tools be used in the classroom? If so, what is the role of the teacher? And finally, will block-based programming help kids learn text-based programming languages like Java and Python? My research seeks to answer these questions. Here is a bit of what I have found.

Kids think block-based programming is easier than text-based programming.

As part of my research on block-based programming in K-12 classrooms, I asked students what they thought about block-based programming. For the most part, students perceived block-based programming to be easier than text-based programming. They cited features such as the “browsability” of available commands, the blocks being easier to read than text-based programming, and the shape and visual layout of the blocks. It is also worth noting that some students viewed block-based programming as inauthentic and less powerful than text-based programming.

Kids do learn programming concepts with block-based tools.

My research found that students do in fact learn programming constructs when using a block-based tool. Moreover, students who learned to program using a block-based tool scored higher on programming assessments than students who learned with a comparable text-based tool. I found a similar result in a different study looking at the AP Computer Science Principles (CSP) exam, which asked students questions in block-based and text-based pseudocode.

Block-based programming may help kids learn text-based languages, but it is not automatic.

I also investigated the transition from block-based to text-based programming in high school computer science classrooms. I found that there was no difference in student performance in learning text-based programming based on prior experience with block-based or text-based programming. In other words, students performed the same regardless of how they had learned programming up to that point. One thing to note is that in my study, the teacher provided no explicit supports to help students make connections between their block-based experience and the text-based language. I mention this only to say that there is still research to be done into how best to support the blocks-to-text transition.

Overall, my research is finding that block-based programming should have a role in K-12 computer science education. While there is still work to be done, what we know so far suggests that block-based programming can serve as an effective introduction to the field of computer science.

David Weintrop is an Assistant Professor in the Department of Teaching & Learning, Policy & Leadership in the College of Education with a joint appointment in the College of Information Studies at the University of Maryland. His research focuses on the design, implementation, and evaluation of accessible, engaging, and equitable computational learning experiences. His work lies at the intersection of design, computational science education, and the learning sciences. David has a Ph.D. in the Learning Sciences from Northwestern University and a B.S. in Computer Science from the University of Michigan.


Longitudinal Trends in K-12 Computer Science Education Research

In this post, Bishakha Upadhyaya provides highlights of our SIGCSE 2020 paper on trends in K-12 CS Education research (co-authored with Monica McGill and Adrienne Decker). For more details, watch her talk or read the paper.

Research in the field of computer science education is growing, and so are the data and results obtained from it. Without a comprehensive look at them collectively, it can be difficult to understand the current longitudinal trends in the field. To identify the trends in K-12 computing education research in the US, we conducted a longitudinal analysis of data collected from five different publication venues over the course of 7 years.

For the purpose of this analysis, we looked at the manually curated dataset on csedresearch.org, which holds over 500 articles focused on K-12 computing education and published from 2012 to 2018. As the majority of the articles in the dataset were from the US, we only looked at research papers whose participants were also from the US. We then ran SQL queries on the dataset to extract the subsets of data that were later analyzed in Tableau and presented visually using graphs and tables.
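As a rough illustration of this kind of extraction step, here is a minimal sketch using Python's built-in `sqlite3` module. The table name `articles`, its columns, and the sample rows are all hypothetical; the actual schema of the csedresearch.org dataset and the queries used for the paper are not shown in this post.

```python
import sqlite3

# Hypothetical rows: (id, year, participant_country, setting).
# These are made-up examples, not data from csedresearch.org.
ROWS = [
    (1, 2012, "US", "informal"),
    (2, 2012, "US", "informal"),
    (3, 2015, "US", "formal"),
    (4, 2018, "US", "formal"),
    (5, 2018, "US", "formal"),
    (6, 2018, "NP", "formal"),    # excluded: participants outside the US
    (7, 2011, "US", "informal"),  # excluded: outside the 2012-2018 window
]


def count_by_year_and_setting(rows):
    """Count US-based articles from 2012 to 2018, grouped by year and setting."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE articles ("
        "id INTEGER PRIMARY KEY, year INTEGER, "
        "participant_country TEXT, setting TEXT)"
    )
    conn.executemany("INSERT INTO articles VALUES (?, ?, ?, ?)", rows)
    cursor = conn.execute(
        "SELECT year, setting, COUNT(*) FROM articles "
        "WHERE participant_country = ? AND year BETWEEN ? AND ? "
        "GROUP BY year, setting ORDER BY year, setting",
        ("US", 2012, 2018),
    )
    result = cursor.fetchall()
    conn.close()
    return result


counts = count_by_year_and_setting(ROWS)
for year, setting, n in counts:
    print(year, setting, n)
```

A grouped result like this could then be exported to a visualization tool to chart how formal and informal activities change over time.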

Some of the major trends that we were interested in examining were:

- Locations of students/interventions studied

- Type of articles (e.g., research, experience, position paper)

- Program data (e.g., concepts taught, when activity was offered, type of activity, teaching methods)

- Student data (e.g., disabilities, gender, race/ethnicity, SES)

Results revealed that there has been an increasing shift in classroom activities from informal curriculum to formal curriculum. This shift suggests that more research is being conducted within classes offered during school hours, increasing the reach to more students with the availability of more labs, lectures and other teaching methods.

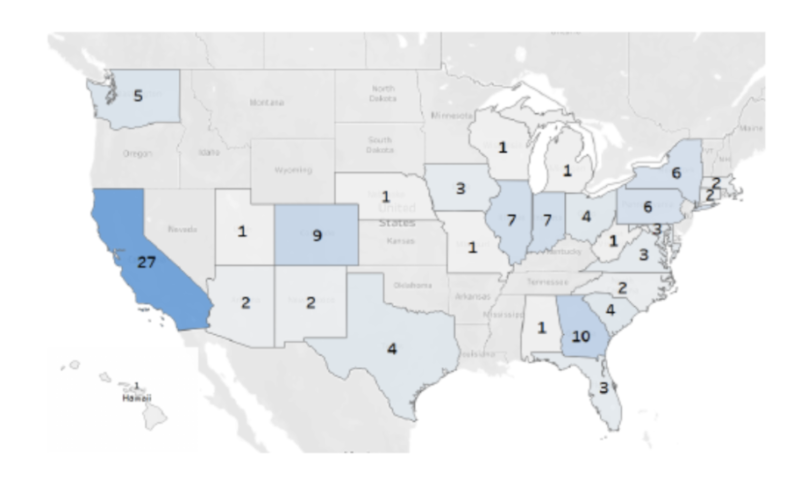

Trends also revealed that the majority of the research papers had student participants based in California. While this may seem reasonable given California is the most populous state in the US, this trend doesn’t follow for Texas, the second most populous state. There were only 4 papers that represented participants from Texas. This suggests that policies and other standards may have an influence over the computing activities and research in the state.

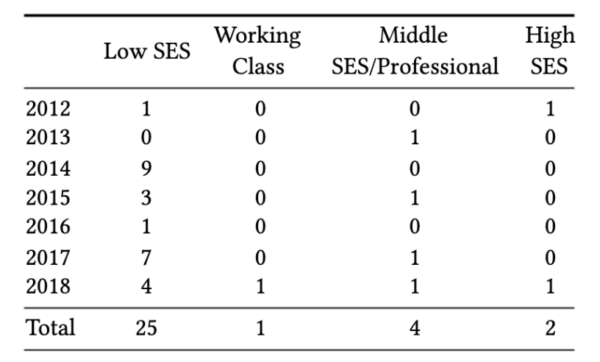

Our analysis revealed another longitudinal trend: various disparities in the reporting of student demographics, particularly the socio-economic status (SES) of the students. For the purpose of this analysis, we treated information about free/reduced lunch as an indicator of low SES when SES was not explicitly reported in the paper. Only 32 of the articles analyzed reported information about students’ SES. Despite previous evidence showing that a student’s SES affects their academic achievement, the underreporting suggests that it is still not being considered in many research studies.

In a field with growing efforts to increase the number of students from different backgrounds studying computer science, our research has shown considerable disparity in the research landscape of computing education. The lack of reporting makes it difficult for everyone, from researchers and educators to policymakers, to understand the results of these efforts, especially what needs improving. It is crucial to see how different interventions play out amongst different populations in order to implement and achieve the goals of CS for All.

Bishakha Upadhyaya is a Senior at Knox College, majoring in Computer Science and minoring in Neuroscience. She was the President of the ACM-W chapter at Knox for the 2019/2020 school year and served as the CS Student Ambassador. She was involved in this research as a part of her summer research project. As a part of her senior research project, she was involved in exploring the enacted curriculum in Nepal, Pakistan, Bangladesh and Sri Lanka. She will be joining Bank of America as a Global Technology Analyst after graduation in Spring 2021.


How to Attract the Girls: Gender-specific Performance and Motivation to learn Computer Science

In this blog post, Peter Hubwieser summarizes his work (with co-authors Elena Hubwieser and Dorothea Graswald) that was published in a 2016 paper. Here, he highlights research exploring the importance of reaching girls earlier through motivation.

The attempt to engage more women in Computer Science (CS) has turned out to be a substantial challenge over many years in many countries. Due to the obvious urgency of this problem, many projects have been launched over the last decades to motivate women to engage in Computer Science. Yet, since even very young girls seem to have different attitudes toward CS compared to boys of the same age, all attempts to influence adult women might come too late.

Potentially, the international Bebras Challenge, which aims to promote Informatics and Computational Thinking, could provide a way to arouse the enthusiasm of girls for Computer Science. During the most recent event, Bebras attracted nearly 3 million participants from 54 countries, separated into different age groups.

As shown e.g. by Deci and Ryan in 1985, motivation is likely to correlate with the personal experience of competency. Although most participants of Bebras are encouraged to participate by their teachers, they solve the tasks individually or in pairs. Therefore, the individual motivation of the students might play a dominant role in their performance. In addition, the Bebras challenge will have a positive impact on the children only if they are able to solve a satisfying number of tasks.

To find out if this is the case and to detect differences between boys and girls, we analyzed the outcomes of the 2014 challenge regarding the gender of the 217,604 registered participants in Germany. Additionally, we compared the average performance of boys and girls in every task.

The boys were more successful overall, and the differences increased dramatically with age. Nevertheless, it turned out that in the two younger age groups (grades 5-6 and 7-8 respectively), girls outperformed boys in several tasks. The analysis of these tasks demonstrated that, in particular, girls can be motivated by the first three factors of the ARCS model of motivation (see Keller 1983): Attention, Relevance and Confidence.

After measuring the performance, we grouped the 27 tasks according to the difference in performance of boys and girls:

- Girls’ Tasks: 7 tasks that were solved significantly better by single and paired girls,

- Boys’ Tasks: 13 tasks that were solved significantly better by single and paired boys,

- Neutral Tasks: 7 tasks, either without any significant gender difference or showing such a difference in only one case (singles or pairs).
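The grouping rule above can be sketched in a few lines of Python. This is only an illustration, not the analysis code from the paper: the function name and the input encoding are hypothetical, and the handling of a task where singles and pairs favor opposite genders is an assumption, since the rule above does not cover that case.

```python
def classify_task(singles_favor, pairs_favor):
    """Assign a task to a group from its two significance results.

    Each argument is "girls" or "boys" when that gender solved the task
    significantly better in that setting, or None when the difference was
    not significant. This encoding is hypothetical.
    """
    if singles_favor == pairs_favor == "girls":
        return "Girls' Task"
    if singles_favor == pairs_favor == "boys":
        return "Boys' Task"
    # No significant difference, or a difference in only one case.
    # Assumption: contradictory results also count as neutral.
    return "Neutral Task"


examples = [
    ("girls", "girls"),
    ("boys", "boys"),
    (None, None),
    ("girls", None),
    (None, "boys"),
]
groups = [classify_task(singles, pairs) for singles, pairs in examples]
for (singles, pairs), group in zip(examples, groups):
    print(singles, pairs, "->", group)
```

Requiring agreement between singles and pairs keeps the Girls' and Boys' groups limited to tasks where the gender difference showed up consistently.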

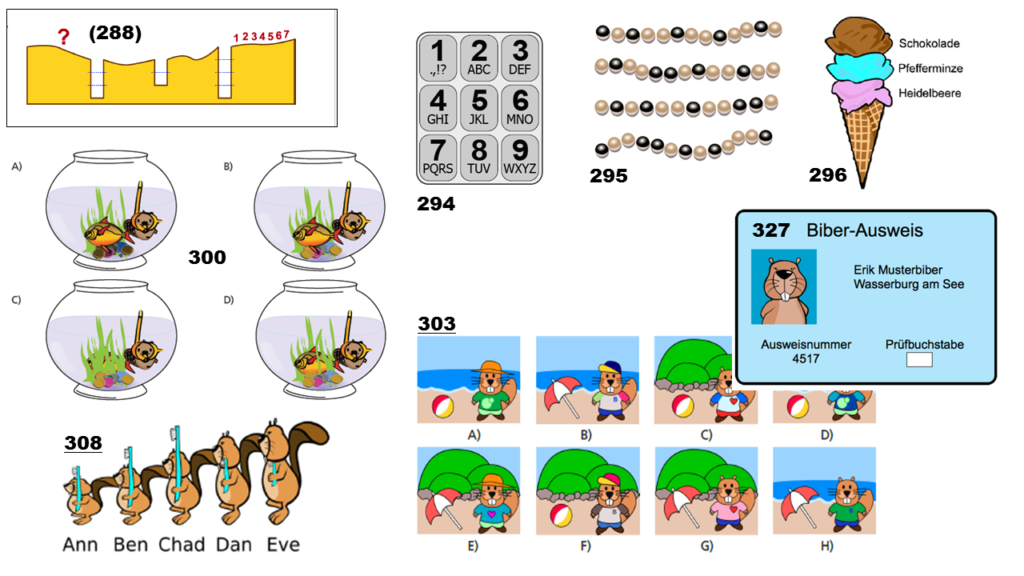

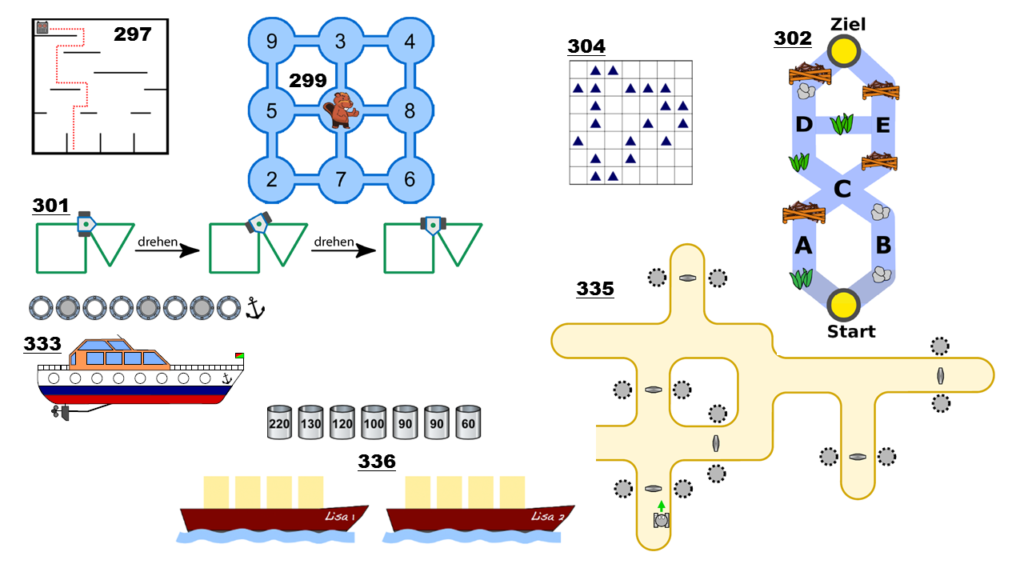

Assuming that each task needs to attract attention, its first impression is likely to be crucial. Therefore, its graphical elements, like pictures or diagrams, are highly relevant. Looking at the graphical elements of the Girls’ Tasks, we found that these mostly represented animals, jewelry or food (see Fig. 1). In the Boys’ Tasks, the dominating elements were mostly abstract rectangular figures, graphs or technical apparatus. The Neutral Tasks had an appearance more or less similar to the Boys’ Tasks (see Fig. 2).

Figure 1. Girls’ Tasks Pictures

Figure 2. Boys’ Tasks Pictures

In this context, relevance is likely to be determined by the probability that the participants or their friends have experienced or will experience a similar situation. Obviously, all of the Girls’ Tasks showed a certain relevance, e.g. how to identify your own bracelet. On the other hand, all of the Boys’ Tasks lacked this relevance, at least for girls, e.g. how a robot can cross a labyrinth. The Neutral Tasks also more or less lacked relevance, except for a few that didn’t look attractive or were too difficult (see below).

Third, the apparent difficulty (as assessed by the participants) of a task will influence the motivation or the confidence to solve it. Indeed, we found that the girls tended to perform significantly better than the boys in tasks of low or medium difficulty. The explanation might be that the self-efficacy of girls was lower compared to the boys. On the other hand, the boys seemed to show higher willingness to deal with challenging problem-solving activities by trial and error.

Yet, we should keep in mind that these results emerged in a contest, where the decision to work on a certain task was voluntary, in contrast to compulsory assignments in the classroom. Nevertheless, CS educators might use these findings to construct tasks that particularly motivate younger girls:

- Look for a situation that is likely to occur in girls’ everyday life,

- Construct a task for this situation that is not too difficult, and

- Draw a nice picture that contains a person, an animal or other lovely objects to attract attention.

Peter Hubwieser, Technical University of Munich (Germany), taught math, physics and computer science at high schools until 2001. In 1995 he completed his doctoral studies in physics. In 2000 he acquired his postdoctoral teaching qualification (habilitation). In 2002 he was appointed to a professorship position at TUM. He has worked as visiting professor in Austria (Klagenfurt, Salzburg and Innsbruck), France (ENS in Paris and Rennes) and Michigan (MSU). His research activities focus on the empirical investigation of learning processes in computer science. His novel didactical approach triggered the introduction of computer science as a compulsory subject at Bavarian Gymnasiums in 2004.


Elena Hubwieser and Dorothee Graswald completed their teacher education at TUM, where they conducted this research in collaboration with Peter Hubwieser. Currently they are teaching math and computer science at Bavarian Gymnasiums.

The original paper was published in a Springer Proceedings Volume: Hubwieser, P., Hubwieser, E., & Graswald, D. (2016). How to Attract the Girls: Gender-Specific Performance and Motivation in the Bebras Challenge. In A. Brodnik & F. Tort (Eds.), Informatics in Schools: Improvement of Informatics Knowledge and Perception: 9th International Conference on Informatics in Schools: Situation, Evolution, and Perspectives, ISSEP 2016, Münster, Germany, October 13-15, 2016, Proceedings (pp. 40–52). Cham: Springer International Publishing. https://doi.org/10.1007/978-3-319-46747-4_4

For more CS education insights, view our blog.

Designing Assessments in the Age of Remote Learning


As we start to ramp up our blog series via CSEdResearch.org, we reached out to Miranda Parker to learn about what she’s researching these days in K-12 CS Education. Her work is both timely and…well, read on to learn more!

Currently, I’m working as a postdoctoral scholar with a team at University of California, Irvine on two projects: NSF-funded CONECTAR and DOE-funded IMPACT. These projects aim to bring computational thinking into upper-elementary classrooms, with a focus on students designated as English Learners. Our work is anchored in Santa Ana Unified School District, where 96% of students identify as Latino, 60% are English Language Learners, and 91% are on free and reduced lunch. There’s a lot of fantastic research that’s come out of these projects, notably the works of my colleagues at UCI that are worth a look.

My primary role is to help with the assessments in the project. There are many interesting challenges to assessing computational thinking learning for upper-elementary students, and these had grown more challenging by the time I started in April, amid emergency remote teaching. I want to share some challenges we’ve faced and are considering in our work, in part to start a conversation with the research community about best practices in a worst-case learning situation.

The confounding variables have exponentially expanded. We always had to consider if assessment questions on computational thinking were also measuring math skills or spatial reasoning. Now we also have to wonder if our students got the question wrong not because they don’t understand the concept, but maybe their sibling needed to use the computer and so the student had to rush to finish, or there were a lot of distractions as their entire family worked and schooled from home.

Every piece of the work is online now. An important part of assessment work is conducting think-aloud interviews to check that the assessment aligns with research goals. This becomes difficult with a remote learning situation. You can no longer entirely read the body language of your participant, you have to contend with internet connectivity, and you may be in a situation that is not the ideal one-on-one environment for think-alouds.

Human-centered design has never been more critical. It’s one thing to design a pen-and-paper assessment to be given to fourth grade students in a physical classroom, where a teacher can proctor and watch each student and answer questions when needed. It’s a totally different thing to design an online survey to be taken by students asynchronously or possibly synchronously over a Zoom call with their teacher, who can’t see what their students are doing. Students know when they’re done with a test in person, but how do you make sure that nine-year-olds finish an online survey and click that last button, thereby saving the data you’re trying to gather?

On the bright side, these challenges are not insurmountable. We did design an assessment, conduct cognitive interviews, and collect pilot study data. Our work was recently accepted as a research paper, titled “Development and Preliminary Validation of the Assessment of Computing for Elementary Students (ACES),” to the SIGCSE Technical Symposium 2021. We’re excited to continue to grow and strengthen our assessment even as our students remain in remote learning environments.


Miranda Parker is a Postdoctoral Scholar at the University of California, Irvine, working with Mark Warschauer. Her research is in computer science education, where she is interested in topics of assessment, achievement, and access. Dr. Parker received her B.S. in Computer Science from Harvey Mudd College and her Ph.D. in Human-Centered Computing from the Georgia Institute of Technology, advised by Mark Guzdial. She has previously interned with Code.org and worked on the development of the K-12 CS Framework. Miranda was a National Science Foundation Graduate Research Fellow and a Georgia Tech President’s Fellow. You can reach Miranda at miranda.parker@uci.edu.


Pressing Research Questions from Practitioners

During EdCon 2019 held in Las Vegas, Chris Stephenson, Head of Computer Science Education Strategy for Google, met with a group of practitioners and policy makers to learn what research questions they would like to see answered.

First, they asked the group to brainstorm questions for which they need answers and share all of their questions. Then they asked the group to pick the most important one for each category. The most important questions appear in bold below.

Most Pressing Research Questions Sourced from Practitioners and Policy Makers

EdCon 2019, Las Vegas

These research questions were collected from practitioners, researchers, and policy makers who attended the CS-ER session led by the Google CS Education Strategy Group at EdCon 2019:

Teachers

● What are the best practices for CS PD in terms of positively impacting student learning?

● Are there unique pedagogical approaches that best support CS learning?

● How do we sustain the CS teacher pipeline?

● How do we measure effective CS teaching?

● What core skills must teachers have to teach CS?

● How do we get more K-8 teachers involved in CS education?

● What is the resources model for sustained PD?

● How do we ensure the PD focuses on pedagogy?

● How do we continue/sustain CS training for teachers?

● What PD options are available for teachers and what are the most effective?

Learning

● How does early CS experience impact future interest in CS?

● What models of CS content delivery provide the largest impact on student learning (after school, in school discrete courses, in school integrated into other disciplines)?

● What data exists currently that demonstrates that the CS curriculum/instruction currently being delivered truly moves the needle?

● Is there a correlation between student engagement in CS and success in other academic areas?

● How does learning CS lead to learning in other disciplines (especially math and science)?

● What are the best IDEs for supporting student learning?

● Are there different pedagogies more suitable/effective for direct instruction versus integrated instruction?

● How does exposure to CS in school impact students who are non-CS majors in university?

● How do industry mentors and the pipeline issues affect CS student identity?

Integration

● What does CS immersion look like in K-5?

● What is the best way to integrate CS in K-12?

● What are the implementation options for CS in elementary school?

Addressing Disparities

● What are the best methods for scaffolding CS concepts for students with academic deficiencies or disabilities?

Policy

● Which policy items provide the best environment for additional progress (which is the firestarter)?

● How do we scale CS in a way that avoids the unexpected negative consequences of some policies?

Advocacy

● Why do some districts jump on board with CS while others hold back?

● How do we reach a common definition of computer science that resonates for school districts and states?

● Who/what are the gatekeepers to CS and how do we remove them?

● How do we help school leadership successfully advocate for CS?

● How do we inform parents (especially from underrepresented populations) about the importance of CS?

● How do we develop parent advocates?

● How can higher ed support extended learning/expansion of content for CS?

To cite this reference, please use:

Stephenson, Chris. “Most Pressing Research Questions Sourced from Practitioners and Policy Makers from EdCon 2019.” CS Education Research Resource Center, CSEdResearch.org, 15 July 2020.
