This article discusses one of our recently published papers on measuring teacher competencies against the CSTA Standards for CS Teachers. You can read our open access paper in full here.

Our SIGCSE Virtual publication, Piloting a Revised Diagnostic Tool for CSTA Standards for CS Teachers, is now available for your reading pleasure. This paper, written by Laycee Thigpen (IACE), Monica McGill (IACE), BT Twarek (CSTA) and Amanda Bell (CSTA), was published in December 2024.

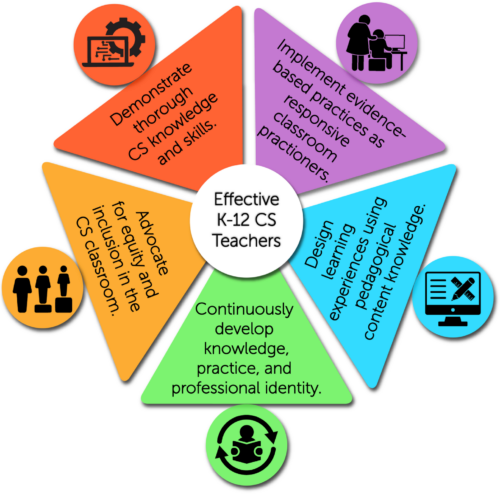

CSTA CS Standards for Teachers

In this study, we consider a way to empirically measure teacher competencies against the CSTA Standards for CS Teachers. The process required teachers to provide a set of artifacts and to explain how their teaching addresses one or more of the rubric items we created. After piloting the tool in 2022, we revised it and retested it in 2023 with a different set of teachers, focusing on four areas: Plan(ning CS teaching), Teach(ing CS), Assess(ing students’ CS learning), and Grow(ing as a CS teacher).

Our research question for this project was: How effective is a diagnostic tool for recommending areas of growth for teachers that are aligned with the CS Standards for Teachers (Standards 2-5)?

In the paper, we discuss how we revised the instrument after the 2022 pilot and how we retested it, including an example item.

The paper provides more details about the methods and results. Here, we focus on one of the most interesting aspects of this work: how we measured teacher competencies. First, we collected artifacts from teachers and evaluated them against rubric items we created. We aligned these rubric items with the CS Standards for Teachers. Second, we asked teachers to complete a set of self-assessment items. This allowed them to reflect on their own competencies and rate themselves against the same standards.

We designed this approach to compare two processes. The first was a thorough but tedious process of collecting data and reviewing each teacher's artifacts individually. The second was the teachers' own self-assessments.

Interestingly, though perhaps unsurprisingly, the results of the artifact reviews and the results of the self-assessment form did not correlate. In some cases they were even negatively correlated. This supports a notion well established in education research: self-assessment is a less effective tool than some form of direct testing of knowledge.

This may be due in large part to what is known as the Dunning-Kruger effect. The Dunning-Kruger effect describes how people with limited knowledge in a domain tend to overestimate their own competence. In short, we don’t know what we don’t know. Teachers may have rated themselves more highly on the self-assessment because they did not fully understand the extent of what they did not know for each item. We recognize there are many possible reasons why the artifact review and self-assessment results did not correlate. One is that the instruments themselves may not have been valid. Even so, we encourage a more empirical approach to evaluating teachers. Teacher evaluations should focus on evidence, not only on teachers' own perceptions of what they need to know to teach computer science.


Thigpen, L., McGill, M. M., Twarek, B., & Bell, A. (2024, December). Piloting a Revised Diagnostic Tool for CSTA Standards for CS Teachers. In Proceedings of the 2024 on ACM Virtual Global Computing Education Conference V. 1 (pp. 207-213).