Join IACE at the 2026 SIGCSE Technical Symposium on Computer Science Education

We’re looking forward to sharing our research and learning from others at the SIGCSE Technical Symposium, February 18-21, 2026, in St. Louis, Missouri. The Technical Symposium (TS) is a fantastic event for being in community with hundreds of researchers and educators as we all continue to understand how we can advance and grow the field of computing education.

Below, we’ve put together information about where we will be presenting our research throughout the event. Hope to see you there!

| Date and Time | Title | Presenter/Authors | Room Number |

|---|---|---|---|

| Feb 18, 1-4:30pm | Affiliated Event (registration required): Guidelines and Tools for Using AI in Computer Science Education Research | Monica McGill, Julie Smith | 103-104 |

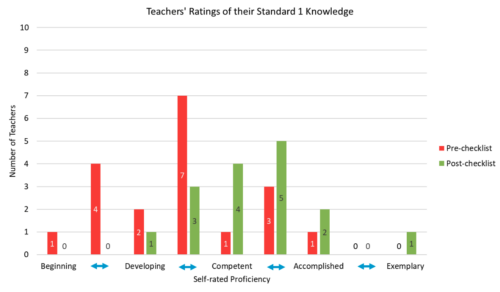

| Feb 19, 10:40 AM | Piloting a Vignettes Assessment to Measure K-5 CS Teacher Proficiencies and Growth | Joseph Tise (IACE), Monica McGill (IACE), Vicky Sedgwick (Visions by Vicky), Laycee Thigpen (IACE), Amanda Bell (Computer Science Teachers Association) | 260-267 |

| Feb 19, 1:40 PM | Special Session: Challenges to Computing Education in Uncertain Times: A Community Discussion | Yesenia Velasco (Duke University), Monica McGill (IACE), Geoffrey Challen (UIUC) | 275 |

| Feb 20, 10:00 AM | Poster: Defining Gaps in Student Affect Research for Computer Science | Julie Smith, Monica McGill, Precious Eze, Charity Odetola (all IACE) | Hall 1 |

| Feb 20, 10:40 AM | Google Session Two: Integrating AI in Education and Research: If, When, and How to Ensure Learning Fidelity Today to Shape a Holistic Future of Computing Education | Monica McGill (IACE) | 230 |

| Feb 20, 11:40 AM | Lightning Talk: Responsible AI Use in Computer Science Education Research | Julie Smith (IACE), Monica McGill (IACE) | 241-242 |

| Feb 20, 3:00 PM | Poster: Motivational Differences to Learn Computer Science Among Middle School Boys and Girls | Joe Tise (IACE), Monica McGill (IACE) | Hall 1 |

| Feb 20, 3:00 PM | Poster: Pathways to Teaching K12 Computer Science: Implications of a Pilot Study of Ten States | Julie Smith (IACE), Jennifer Rosato (Northern Lights Collaborative) | Hall 1 |

| Feb 21, 10:00 AM | Poster: Building Trust in a Computer Science Professional Development Passport for K-12 Teachers | Joseph Tise (IACE), Monica McGill (IACE), Robert Schwarzhaupt (AIR) | Hall 1 |

| Feb 21, 10:40 AM | A Longitudinal Pilot Study Exploring the Impacts of Coaching for Equity on Computer Science Teachers | Jennifer Rosato (Northern Lights Collaborative), Joseph Tise (IACE), Monica McGill (IACE), Megan Deiger (Consultant) | 100 |